How Facebook & Twitter Are Handling Post-Election Disinformation

Leading up to the final days of the 2020 election, Facebook and Twitter made a flurry of policy changes aimed at protecting the integrity of the election.

Facebook banned ads that “praise, support or represent militarized social movements,” said it would add context to posts when a candidate or party prematurely declares victory, banned ads that delegitimize the election’s outcome, and banned entities from purchasing post-Election Day political ads before a winner is officially declared. These were just the changes in the last month, and don’t include the numerous changes Facebook implemented in response to its damning civil-rights audit.

Twitter banned political ads over a year ago, but just this Monday updated its blog post to note it would protect the election’s integrity by adding informative labels to deceptive election-related tweets. The company said it would “not allow anyone” to use its platform “to manipulate or interfer[e] in elections or other civic processes.” It also said it would “label Tweets that falsely claim a win for any candidate and [would] remove Tweets that encourage violence or call for people to interfere with election results or the smooth operation of polling places.”

How the platforms address disinformation, calls to violence, misleading claims about election results, and nefarious uses of their tools and features is shifting and evolving in real time.

To an outside observer, it may seem that the platforms reacted decisively and swiftly as they labeled posts from President Trump and others spreading disinformation. In reality, it took years of work from racial-justice and civil-rights organizations, journalists and scholars to get the platforms to this moment. The work of Change the Terms, Stop Hate for Profit, the Disinformation Defense League and the hundreds of organizations that participated in Facebook’s two-year-long civil-rights audit should not be overlooked while platforms pat themselves on the back for avoiding the worst possible outcomes.

As policies and enforcement actions shift, so do the tactics of nefarious actors, who quickly learn to “color within the lines” of platform policies or simply re-create groups or pages to reorganize and re-post content. The platforms must remain vigilant and shift policies and enforcement actions to meet the moment, which may also include turning off features or adding friction to reduce the spread and virality of problematic posts.

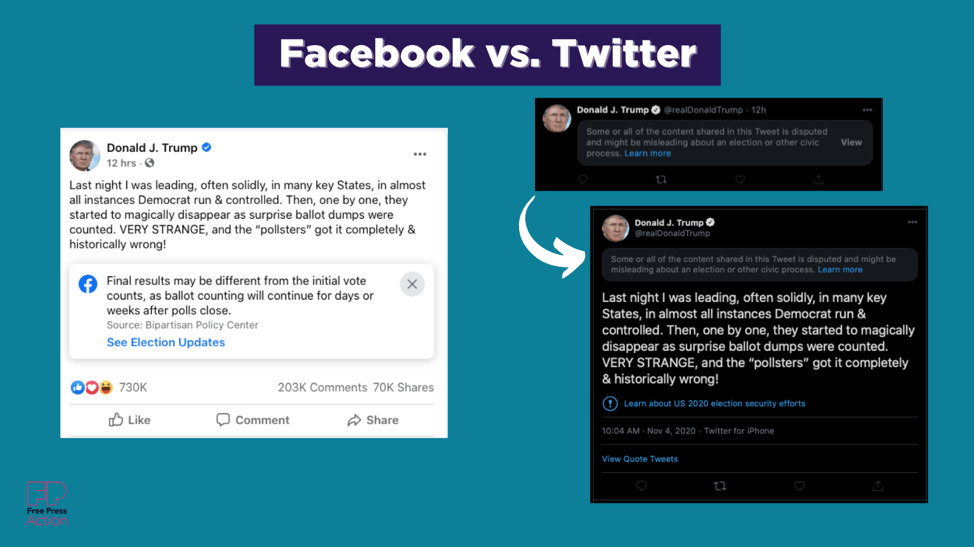

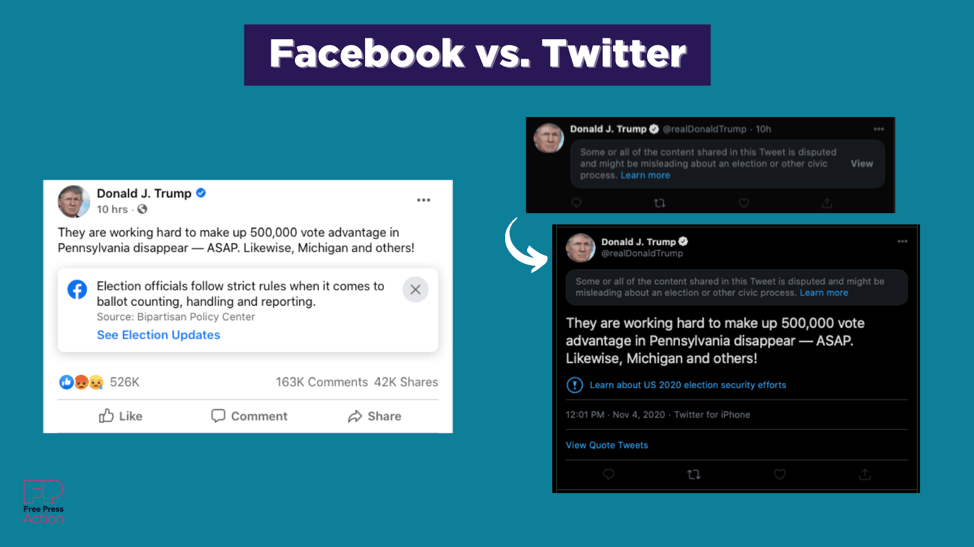

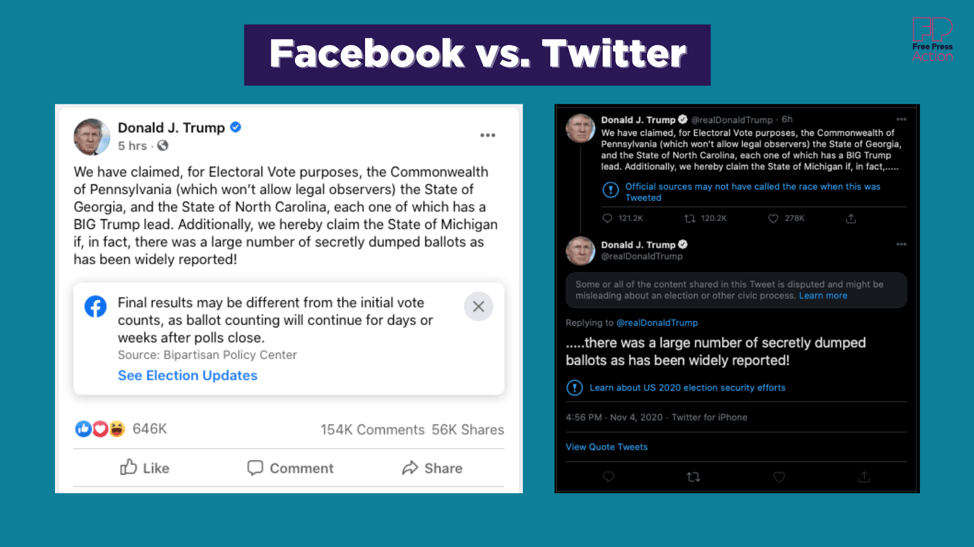

This analysis is a glimpse into a small portion of the content shared by both presidential candidates. Comparing Facebook and Twitter reveals how each company handled the exact same content.

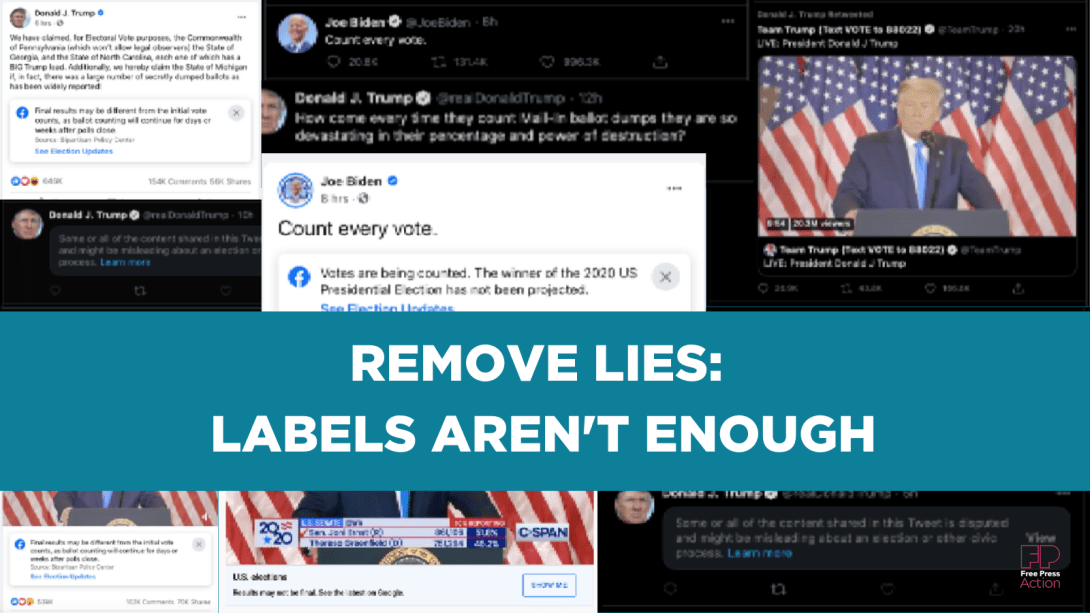

One thing is clear: Labeling posts is not enough. Misleading claims about the 2020 vote must be immediately removed.

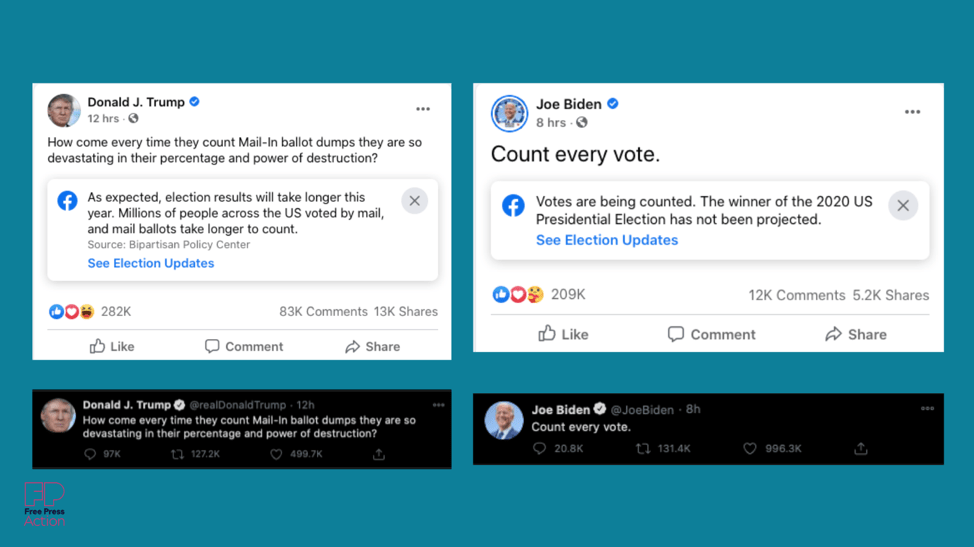

The Facebook v. Twitter label comparison

On Election Day, MoveOn researcher Natalie Martinez pointed out that according to Facebook’s own analytical tool CrowdTangle, “the top three Facebook page posts in the past 12 hours are all from Trump. All three posts contain misinformation. In total, they’ve earned almost 2.5 million interactions.”

Facebook simply added a milquetoast label without restricting features that allow the content to be shared, liked or commented on — an approach that’s done nothing to hinder the posts’ reach.

Facebook added two different labels to each of the three posts:

- “Final results may be different from the initial vote counts, as ballot counting will continue for days or weeks after polls close.”

- “Election officials follow strict rules when it comes to ballot counting, handling and reporting.”

Facebook placed each of its labels below the content and did not obscure the posts or note why they’re problematic. Notably, Facebook still kept all its engagement features turned on: People could still comment on, share or react to the posts. An individual user could also easily close out and hide the label by clicking on the “X” in the top right corner of a given post. Once closed, the label does not reappear for that particular user.

In other words: The content is still going viral.

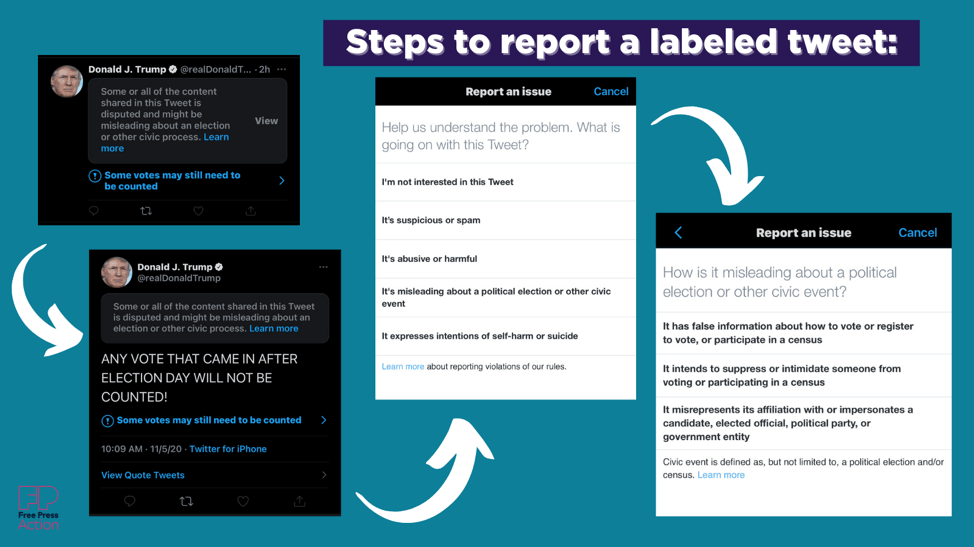

Twitter included the words “disputed” and “misleading” in its label: “Some or all of the content shared in this Tweet is disputed and might be misleading about an election or other civic process.”

In both cases, the burden is on the individual Facebook or Twitter user to decipher why the content may or may not be disputed, misleading or otherwise in violation of the company’s community standards.

Twitter used this label in a different way than Facebook did: It covered up the original content.

A user needs to click “View” to see the text; doing so brings up a new page, where the label appears above the post. These tweets were all liked and retweeted thousands of times before the label was added, but those engagement numbers no longer appear below the content. Twitter did turn off certain engagement features: The tweets can’t be liked or retweeted. However, Twitter still allows people to Quote Tweet, meaning folks can circulate the tweets by attaching a comment above them, and others can easily find these Quote Tweets.

Twitter restricted its features to a degree, but because it removed the interaction counts, it is unclear exactly how viral the posts were or continue to be.

There are also posts in which Facebook added a label and Twitter did not

In Trump’s post about so-called “mail-in ballot dumps,” Facebook’s label states: “As expected, election results will take longer this year. Millions of people across the US voted by mail, and mail ballots take longer to count.” Twitter left a similar tweet unaltered.

Facebook has added labels to a significant number of posts — not just from both presidential candidates, but from other users who are either sharing their thoughts on the election or noting whether they had voted. The labels don’t alert users that a particular post includes disinformation, but rather that it includes information (truthful or otherwise) about the election or voting.

Twitter, on the other hand, did not label any of Joe Biden’s tweets — mainly because they do not include disinformation — while Facebook included the label on several Biden posts.

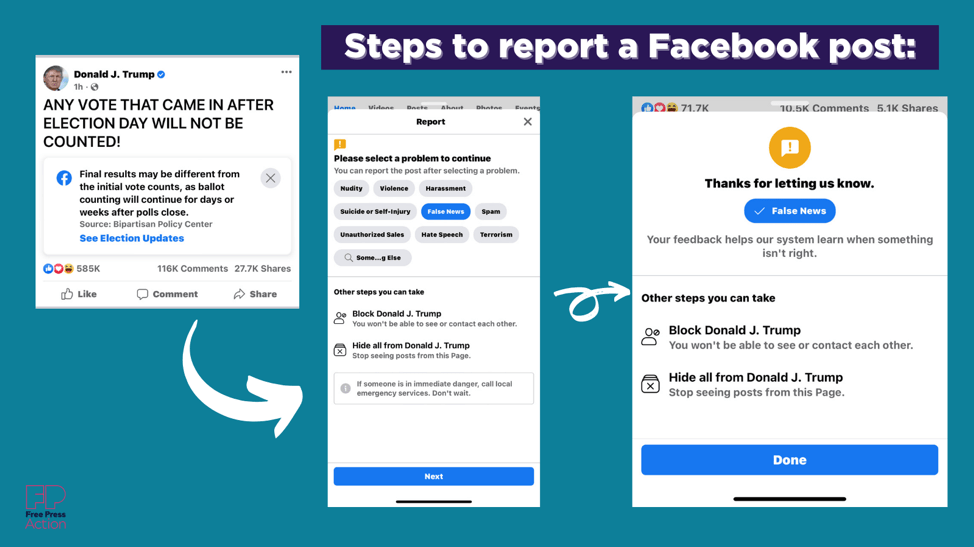

Reporting mechanisms are not keeping up with the policy changes

Both platforms have long had features that allow users to report content that violates the companies’ policies. But when it comes to election-related posts, the reporting options are not keeping pace with the changes in the platforms’ policies or the content being posted.

The platforms are asking people to play a multiple-choice game where none of the choices truly captures why the content is problematic or why it violates policies against election disinformation and undermines the democratic process.

Here is an example of identical content Trump posted on Nov. 5, 2020, on both Facebook and Twitter.

And here are your options on Twitter for the same content that in this case is already hidden behind a label:

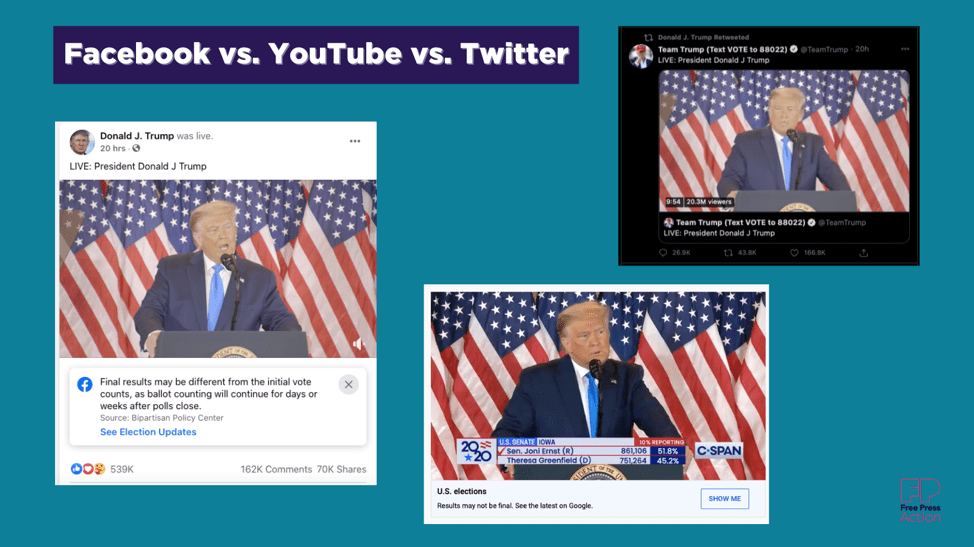

YouTube’s role in all of this

Unfortunately, it isn’t possible to do a point-by-point comparison of the content as it appeared on YouTube, except for the video of Trump’s speech prematurely declaring victory. YouTube’s preparation ahead of the election, or lack thereof, is a whole other story.

Conclusion

The impact of the label design choices demands further study. Areas meriting further analysis include whether a label shows up below or above a post (or covers the content altogether); the words used to convey information to the user; whether the labels can be removed or closed out; and what engagement features are still available on those posts.

Reports have confirmed that Facebook will “now restrict posts on both Instagram and Facebook that its systems flag as misinformation so that they are seen by fewer users.” The company also said it would limit the spread of election-related videos.

However, in this moment when our democracy is being tested, we urge the platforms to remove the content that violates their policies.

Indeed, some groups have been deplatformed and content calling for violence has been removed. But these are uncharted waters. Right now it’s clear that even the posts the platforms have labeled are still allowed to be shared — and go viral. This gives bad actors ample opportunity to weaponize narratives and spread disinformation at a pivotal moment for our democracy.